
Archie Campbell (amc1), New member. Username: amc1; Post Number: 1; Registered: 03-2008

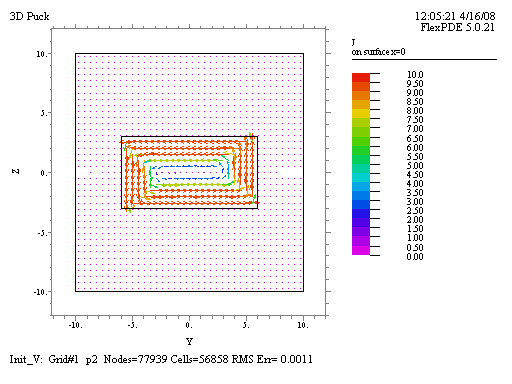

My problem is that, in moving from 2D to 3D, the time to reach a solution has become unmanageably long, and for the most interesting case no solution is reached at all. The problem has four variables (the three components of the magnetic vector potential plus the electrostatic potential), uses a 3D mesh and is nonlinear, so it is pretty challenging. The only comparable published work used Flux 3D, which is much more expensive and appeared to require considerable additions to the basic code, so perhaps I am expecting too much. Still, I am encouraged by the fact that even with default values FlexPDE can produce a result.

The problem involves EM fields in superconductors, and the basic equation is the spatial part of the diffusion equation (no time dependence). The diffusion coefficient contains an exponential, but this is always less than unity. It depends on a material parameter ‘d’, which determines the sharpness of the transition between plus and minus Jc on the right-hand side of the equation. If d is large, the equation reduces to the linear diffusion equation, so staging d from large to small has proved very effective in avoiding instabilities in 2D. The geometry is simple: a disc with a magnetic field applied parallel to its faces.

My questions are: why does the 3D system take so long when the number of nodes (about 20K) is similar to the 2D version, and how can I decrease the time required? I attach two files. ‘3dstart’ is for a field applied from zero; it took five hours at low accuracy. The monitors showed reasonable figures much more quickly, but the programme then spent a lot of time on things that had little effect and did not take long in 2D. It was almost impossible to interrupt the programme without removing the dongle. Is there useful diagnostic information in the panel on the left of the screen, where entries such as ‘non-linear 49’ or ‘Lanczos’ appear that are not referred to in the manuals? I also attach the log for this run.
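The staging of d described above is a form of parameter continuation. A minimal sketch, assuming a hypothetical 1D stand-in (u'' = tanh(u/d) on [0, 1] with u(0) = 1, u(1) = -1) rather than the actual vector-potential equations, and written in Python rather than as a FlexPDE script: for large d the right-hand side is nearly linear, and each smaller d is solved by Newton's method starting from the previous stage's converged solution. The model, grid size and d schedule are illustrative assumptions.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm for a tridiagonal system. No pivoting, which is fine
    for the diagonally dominant Jacobian used below. lower[0] and upper[-1]
    are ignored."""
    n = len(diag)
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = upper[0] / diag[0]
    dp[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * cp[i - 1]
        cp[i] = upper[i] / denom if i < n - 1 else 0.0
        dp[i] = (rhs[i] - lower[i] * dp[i - 1]) / denom
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def newton_solve(u, d, ua=1.0, ub=-1.0, tol=1e-8, max_iter=50):
    """Solve the hypothetical model u'' = tanh(u/d) on [0, 1], u(0)=ua,
    u(1)=ub, by Newton's method on a uniform finite-difference grid.
    `u` holds the interior nodal values used as the starting guess.
    Returns (solution, number of Newton updates taken)."""
    n = len(u)
    h2 = (1.0 / (n + 1)) ** 2
    u = list(u)
    for k in range(max_iter + 1):
        full = [ua] + u + [ub]
        # Residual F_i = (u_{i-1} - 2 u_i + u_{i+1}) / h^2 - tanh(u_i / d)
        res = [(full[i] - 2.0 * full[i + 1] + full[i + 2]) / h2
               - math.tanh(u[i] / d) for i in range(n)]
        if max(abs(r) for r in res) < tol:
            return u, k
        if k == max_iter:
            break
        # Tridiagonal Jacobian dF/du: off-diagonals 1/h^2,
        # diagonal -2/h^2 - sech^2(u_i/d) / d
        off = [1.0 / h2] * n
        diag = [-2.0 / h2 - (1.0 - math.tanh(ui / d) ** 2) / d for ui in u]
        step = solve_tridiagonal(off, diag, off, [-r for r in res])
        u = [ui + si for ui, si in zip(u, step)]
    raise RuntimeError(f"Newton did not converge for d = {d}")


def continuation(d_schedule, n=49):
    """Stage d from large (nearly linear problem) to small, warm-starting
    each stage from the previous converged solution."""
    u = [0.0] * n  # cold start only for the first, nearly linear stage
    history = []
    for d in d_schedule:
        u, updates = newton_solve(u, d)
        history.append((d, updates))
    return u, history


u, history = continuation([10.0, 3.0, 1.0, 0.3, 0.1, 0.05])
for d, updates in history:
    print(f"d = {d:5.2f}: {updates} Newton updates")
```

Only the structure matters here: each stage is warm-started from the last converged profile, so the solver never has to find the sharp, small-d solution from a cold start.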
Speeding this up would be useful, but the real goal is to take the previous result as a starting point and then change the field in small steps, as in ‘3dsteps’ (attached). Because the system is hysteretic, the equations are similar but are written in terms of the difference between the potential and its previous value, which is transferred from the previous programme or stage. This programme ran for five days, after which the PC gave up trying. I suspect part of the problem is that at every step it must interpolate A from the transferred values, since the mesh has changed. Is there a way of locking the mesh so that the mesh in the two programmes is identical and only a look-up table is required? This would also be useful in a separate problem, where I think that repeated interpolations over many cycles are building up errors.
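The suspected build-up of interpolation error can be illustrated with a toy 1D model, assuming plain piecewise-linear interpolation (FlexPDE's own 3D transfer interpolation is not reproduced here). Nodal values are passed back and forth between two hypothetical meshes whose interior nodes are staggered, and the result is compared with transfers between identical meshes, where interpolation at the nodes reduces to a look-up.

```python
import bisect
import math


def interp(x_new, x_old, f_old):
    """Piecewise-linear interpolation of nodal values f_old, given on the
    sorted nodes x_old, at the points x_new."""
    out = []
    for x in x_new:
        j = bisect.bisect_right(x_old, x) - 1
        j = min(max(j, 0), len(x_old) - 2)  # clamp to a valid cell
        t = (x - x_old[j]) / (x_old[j + 1] - x_old[j])
        out.append((1.0 - t) * f_old[j] + t * f_old[j + 1])
    return out


def round_trips(mesh_a, mesh_b, f, cycles):
    """Transfer nodal values A -> B -> A `cycles` times, as when each stage
    re-reads the previous stage's result on a different mesh."""
    for _ in range(cycles):
        f = interp(mesh_a, mesh_b, interp(mesh_b, mesh_a, f))
    return f


n_cells = 20
mesh_a = [i / n_cells for i in range(n_cells + 1)]
# Hypothetical second mesh: same end points, interior nodes at A's cell midpoints.
mesh_b = [0.0] + [(i + 0.5) / n_cells for i in range(n_cells)] + [1.0]
f0 = [math.sin(math.pi * x) for x in mesh_a]

staggered = round_trips(mesh_a, mesh_b, f0, cycles=100)
identical = round_trips(mesh_a, mesh_a, f0, cycles=100)

print(f"peak after 100 staggered transfers: {staggered[n_cells // 2]:.3f}")
print("max change with identical meshes:",
      max(abs(a - b) for a, b in zip(identical, f0)))
```

On the staggered meshes each round trip replaces every interior value by the weighted average (f[i-1] + 2 f[i] + f[i+1]) / 4, so after 100 transfers the peak of sin(πx) drops to about 0.54. With identical meshes the values come back bit-for-bit unchanged.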


Robert G. Nelson (rgnelson), Moderator. Username: rgnelson; Post Number: 1102; Registered: 06-2003

The finite element method solves for the distribution of nodal values that minimizes the integrals of the PDE residuals, using the interpolation functions defined within the cells. With several variables and coarse cells, there can be more than one combination of nodal values with similar residual integrals. None of these is really the "solution", because the interpolators cannot properly resolve the shape of the solution, but this condition can cause the iterative solver to struggle. This is one possible reason your system runs slowly. Once the solver has struggled to an approximate solution, the error estimates will then call for mesh refinement and a re-solve, which adds further run time.

Based on this assumption, I tried increasing the mesh density in the puck and turning off the regrid. Of course, a denser mesh also increases the run time, so this would appear to be a lose-lose proposition. It may be possible to tune the mesh so that the dense cells lie only near the surface, or some similar strategy, but I have not experimented with this. I did a preliminary run with no V equation (39 minutes on my 1.5 GHz Athlon) and used it as the starting value for a run including the V equation (22 hours), with errlim left at its default of 0.002. I realize this is not much faster overall than the times you quote, but the answers look reasonably clean, so it may be worth it. I have not tried to extend this run to the staged hysteretic system.
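The resolution argument can be made concrete with a 1D sketch. It measures how well piecewise-linear cells represent a hypothetical sharp layer tanh((x - 0.5)/W), first on uniform meshes of increasing density and then on a mesh with the same number of cells as the coarsest one but clustered at the layer (a rough analogue of putting the dense cells only near the surface). The layer width W and the sinh grading rule are assumptions, and the numbers say nothing quantitative about the 3D run.

```python
import bisect
import math

W = 0.01  # assumed width of the sharp layer


def layer(x):
    """Hypothetical solution with a sharp transition of width W at x = 0.5."""
    return math.tanh((x - 0.5) / W)


def max_interp_error(nodes, f, samples=4001):
    """Largest error of the piecewise-linear interpolant of f on `nodes`,
    checked at evenly spaced sample points in [0, 1]."""
    vals = [f(x) for x in nodes]
    worst = 0.0
    for k in range(samples):
        x = k / (samples - 1)
        j = min(max(bisect.bisect_right(nodes, x) - 1, 0), len(nodes) - 2)
        t = (x - nodes[j]) / (nodes[j + 1] - nodes[j])
        approx = (1.0 - t) * vals[j] + t * vals[j + 1]
        worst = max(worst, abs(approx - f(x)))
    return worst


def uniform_mesh(n_cells):
    return [i / n_cells for i in range(n_cells + 1)]


def graded_mesh(n_cells, beta=5.0):
    """Same cell count, but nodes clustered around x = 0.5 by an assumed
    sinh stretching; larger beta means stronger clustering."""
    nodes = [0.5 + 0.5 * math.sinh(beta * (2 * i / n_cells - 1)) / math.sinh(beta)
             for i in range(n_cells + 1)]
    nodes[0], nodes[-1] = 0.0, 1.0  # pin the end points exactly
    return nodes


for n in (20, 80, 320):
    err = max_interp_error(uniform_mesh(n), layer)
    print(f"uniform, {n:3d} cells: max error {err:.4f}")
print(f"graded,   20 cells: max error {max_interp_error(graded_mesh(20), layer):.4f}")
```

The coarse uniform mesh misses the layer badly (maximum error above 0.5), while the graded mesh with the same 20 cells does better than the uniform 80-cell mesh. That is the trade-off behind concentrating cells where the solution changes quickly instead of refining everywhere.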


Robert G. Nelson (rgnelson), Moderator. Username: rgnelson; Post Number: 1104; Registered: 06-2003

You can avoid the cost of frequent lookups of transfer values by using a SAVE command: `Transfer("name",Ax00)` followed by `Ax0 = SAVE(Ax00)`. SAVE() causes its argument to be evaluated and stored at each mesh node; references to the SAVEd data are then interpolated in each cell from the nodal values. This is somewhat wasteful of memory, as both the transfer data and the SAVE data are held permanently, but the transfer lookup is done only once per grid. Caveat: in version 5, SAVE does not respect discontinuities at material interfaces, and will smear or overshoot discontinuous values.
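A toy 1D model of this trade-off, written in Python and not reflecting FlexPDE's internals: `CountedLookup` is a hypothetical stand-in for the transfer lookup that counts its calls. The direct approach evaluates it at every sample point of every cell in each of 30 assembly passes (an assumed number of nonlinear iterations); the SAVE-like approach evaluates it once per node and then interpolates the nodal values inside each cell. The assumed coefficient jumps from 1 to 5 at x = 0.5, which also reproduces the smearing mentioned in the caveat.

```python
class CountedLookup:
    """Hypothetical stand-in for a transfer lookup: wraps a function of x and
    counts how many times it is evaluated."""

    def __init__(self, func):
        self.func = func
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.func(x)


def assemble(value_at, nodes, quad=(0.25, 0.75)):
    """One hypothetical assembly pass: integrate value_at(j, t) over every
    cell j, sampling local coordinates t in [0, 1] with equal weights."""
    total = 0.0
    for j in range(len(nodes) - 1):
        h = nodes[j + 1] - nodes[j]
        for t in quad:
            total += value_at(j, t) * h / len(quad)
    return total


def material(x):
    """Assumed discontinuous coefficient with a material interface at x = 0.5."""
    return 1.0 if x < 0.5 else 5.0


nodes = [i / 20 for i in range(21)]  # 20 cells; node 10 sits on the interface
passes = 30  # assumed number of assembly passes (e.g. nonlinear iterations)

# Direct: every sample point of every pass triggers a fresh lookup.
direct = CountedLookup(material)


def direct_value(j, t):
    return direct(nodes[j] + t * (nodes[j + 1] - nodes[j]))


for _ in range(passes):
    direct_total = assemble(direct_value, nodes)

# SAVE-like: one lookup per node, then linear interpolation inside each cell.
saved_lookup = CountedLookup(material)
nodal = [saved_lookup(x) for x in nodes]


def saved_value(j, t):
    return (1.0 - t) * nodal[j] + t * nodal[j + 1]


for _ in range(passes):
    saved_total = assemble(saved_value, nodes)

print(direct.calls, saved_lookup.calls)                # 1200 21
print(round(direct_total, 6), round(saved_total, 6))  # 3.0 3.1
```

The direct approach makes 1200 lookups against 21 for the nodal copy, at the cost of keeping both the source data and the nodal list in memory. The nodal copy, however, integrates to 3.1 instead of the exact 3.0, because the cell next to the interface interpolates linearly between the two material values. This linear sketch shows only the smearing, not the overshoot.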

Archie Campbell (amc1), New member. Username: amc1; Post Number: 2; Registered: 03-2008

Thanks, I will try these out.